It is estimated that one-third of global internet users are under the age of 18. As digital technologies increasingly mediate nearly all facets of their lives, including their education, young people encounter unique opportunities and risks online. It is imperative to ensure that well-meaning but perhaps rushed efforts to protect youth from risks do not significantly limit their access to valuable opportunities. Rather, these efforts must both protect and empower young people while allowing them to gradually develop autonomy and resilience.

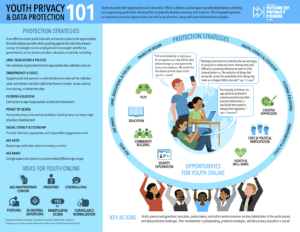

Today, the Future of Privacy Forum (FPF) released a new infographic, Youth Privacy and Data Protection 101, which provides an overview of particular opportunities and risks for youth online, along with potential protection strategies. It also features young people’s voices from around the world on their preferences and attitudes toward privacy.

Risks for youth online include well-known concerns such as coming across age-inappropriate content, encountering predators, and being a victim of cyberbullying or cyber harassment. Other, less visible risks include commercial exploitation through profiling and behavioral advertising as well as societal shifts such as surveillance normalization, as young people may become accustomed to constantly being watched and recorded.

However, there is also a wealth of opportunities for youth online. With school closures due to the pandemic, most students now access their education virtually. Unable to connect with their friends and communities in person, young people rely on social media and other online tools to play, build their communities, explore their identities, and participate in civic and political forums. Online spaces are also integral to fostering creative expression and providing resources related to health and well-being.

The desire to shield youth from risks could block access to opportunities. Conversations regarding protection strategies should consider both the opportunities and risks, to promote the development of a robust, thriving online ecosystem that is also suitable for youth.

To assist these efforts, the infographic includes a range of strategies that actors, including governments, online service providers, educators, and parents, can use and encourage. While not exhaustive, the list displays the diversity of approaches to youth privacy protections being considered and implemented globally. These approaches include the following categories:

- Laws, Regulations, and Policies

Policymakers may set underlying legal protections for appropriate data collection and use by governments, businesses, organizations, and other entities that collect, process, and share young people’s data. Key considerations under debate include determining the appropriate age of digital consent, providing consent rights to the parent or the child, promoting a consent-based or rights-based framework, and relying on comprehensive or sector-based legislation. In the US, the Children’s Online Privacy Protection Act (COPPA) imposes certain requirements related to notice, consent, access, retention, and security on companies with services directed at children under the age of 13. In the EU, the General Data Protection Regulation (GDPR) includes special protections for children, such as requiring parental consent prior to processing children’s data and child-friendly notices. Currently, the GDPR gives individual Member States flexibility in determining the national age of digital consent, between the ages of 13 and 16. However, the European Commission has suggested harmonizing the age across the European Union as a possible future policy development.

- Transparency and Choice

Policies and standards adopted globally increasingly include requirements for young people and their parents to be informed in an accessible manner about how their data will be used and what rights they have. This information can be delivered through “bite-sized” explanations or “just-in-time” notices, as suggested in the UK Age Appropriate Design Code (Code). Other practices include presenting privacy information in a form that appeals to the user’s age, such as using cartoons, videos, or gamified content, and providing resources for parents to talk to their children about privacy. Consent is also often necessary prior to processing youth data. Consent, whether the parent or the child provides it, must be freely given, specific, informed, and unambiguous. The Irish Draft Fundamentals for a Child-Oriented Approach to Data Processing (Fundamentals) also points out that consent does not change childhood and should not justify treating children as if they were adults.

- Filtering and Blocking

Content filters block access to certain websites and online services. In the US, the Children’s Internet Protection Act (CIPA) requires schools and libraries receiving funding through the E-rate program to filter and block access to age-inappropriate content and interactions. To address concerns about school safety, schools also use filtering and blocking to flag and prevent incidents of self-harm and harm to others. In other countries, like South Korea, telecommunications companies are required to block access to content considered harmful to minors, under the Telecommunications Business Act.

- Privacy by Design

Privacy by design refers to embedding privacy protections in the design, operation, and management of products and services. The 7 Foundational Principles, created by Ann Cavoukian, include taking a proactive (not reactive) stance, protecting privacy by default, fully integrating privacy into systems, retaining full functionality of services, ensuring end-to-end security, maintaining visibility and transparency, and keeping things user-centric. The UK ICO’s Code and Irish DPC’s Fundamentals both encourage high privacy protections for youth by design and by default, thereby baking in the protections.

- Digital Literacy and Citizenship

Digital literacy and citizenship education and resources can empower young people to take control of their own privacy and promote informed, appropriate, and responsible engagement online. Resources like the London School of Economics’ (LSE) My Privacy UK, Common Sense Media’s Digital Citizenship Curriculum, and the International Society for Technology in Education’s (ISTE) Standards for Students help young people understand privacy and take ownership of their digital lives.

- Age Gates

Age gates require age verification prior to accessing a service. With age gates, young people input their date of birth or other identifying information indicating that they are above a certain age, before they are allowed to use a service or purchase a product. For example, age gates are used to restrict youth access to pornography, gambling, and certain social media platforms. Critics claim that age gates are easily circumventable, as users may lie about their age online. Without alternatives, young people are incentivized to lie and gain access to a service lacking adequate privacy protections, or tell the truth and lose access to that service.

- Age Bands

Age bands offer different versions of a service or different privacy rights and protections for defined age ranges. The UK Code provides a guide for assessing the different developmental stages and needs of youth based on their age ranges. The Code considers ages 0 to 5 as preliterate or early literacy, 6 to 9 as core primary school years, 10 to 12 as transition years, 13 to 15 as early teens, and 16 to 17 as approaching adulthood. A recent example of applying age bands is TikTok’s enhanced default privacy settings for users ages 13 to 15. These settings limit who can comment on, Duet with (record a video alongside another user’s video), Stitch with (clip and integrate scenes from another user’s video), and download the videos of users in this age range, and they turn off the feature that suggests their accounts to others.
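
For service providers wondering how age bands might translate into practice, the following is a minimal Python sketch, not an implementation prescribed by the UK Code. It maps a user's age to the age ranges listed above. The names `AGE_BANDS`, `age_on`, and `age_band` are hypothetical, and the sketch assumes the service already holds a date of birth it considers reliable, which, as noted under Age Gates, is often not the case.

```python
from datetime import date
from typing import Optional

# Age ranges as listed in the text above (inclusive lower and upper bounds).
# The structure and names here are illustrative assumptions.
AGE_BANDS = [
    (0, 5, "preliterate or early literacy"),
    (6, 9, "core primary school years"),
    (10, 12, "transition years"),
    (13, 15, "early teens"),
    (16, 17, "approaching adulthood"),
]


def age_on(birth_date: date, today: date) -> int:
    """Return the number of completed years between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not yet occurred.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def age_band(age: int) -> Optional[str]:
    """Return the band label for an age, or None for users aged 18 or over."""
    if age < 0:
        raise ValueError("age cannot be negative")
    for low, high, label in AGE_BANDS:
        if low <= age <= high:
            return label
    return None
```

A service could use the returned label to select default settings for each band, for example stricter defaults for the "early teens" band, while treating a `None` result as an adult account.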

Click here to download the full (PDF) infographic.