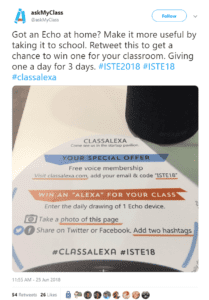

When a technology vendor states that their product is not meant for classroom use, that’s a red flag. This summer, student privacy advocate Bill Fitzgerald specifically asked an Amazon representative about using the Echo in the K-12 classroom and reported that “the person was crystal clear – unequivocably, unambiguously clear – that in the classroom Alexa and Dot posed compliance and privacy issues.” Yet there were several sessions—and vendors—at last summer’s ISTE conference extolling the virtues of Alexa in K-12 classrooms. Teachers report using the devices for basic tasks like math drills and reading aloud. A web search for “Google Home in the Classroom” or “Alexa in the Classroom” will turn up blog posts from enthusiastic educators endorsing the use of voice assistants with students. However, teachers should consider several privacy and security factors before bringing these devices into K-12 classrooms.

First, it’s important to remember that these devices are designed for consumer use, not educational use. Voice assistants such as Amazon Echo and Google Home are used by consumers to help automate common tasks such as home heating and lighting, listening to music, searching the internet, making online purchases, and sending emails. While some consumer tools, like G Suite, have an education version with privacy policies and terms of service agreements specifically geared towards educational use, Amazon Echo and Google Home do not.

Second, the legal implications of using voice assistants in K-12 schools are, to say the least, murky under state and federal privacy law. The Family Educational Rights and Privacy Act (FERPA), the most significant federal law governing student privacy, was written in 1974, long before artificial intelligence was an educational consideration. The U.S. Department of Education has not issued any formal guidance on the use of voice assistants in the classroom, but its FAQs on Photos and Videos Under FERPA provide guidance and examples of situations in which photos and videos would be considered FERPA-protected education records (the same principles would apply to audio recordings).

Consumer voice assistants like the Amazon Echo and Google Home, when used in classrooms, also risk violating the Children’s Online Privacy Protection Act (COPPA), federal legislation that protects the privacy of children under the age of 13. If an app, tool, or device is not designed for classrooms, could be used for a “commercial purpose” (in the case of a voice assistant, for example, it could collect data for advertisements or be used to buy a product), and will collect data from children under the age of 13, educators must get parental consent before using it. The Federal Trade Commission (FTC) has issued Guidance on COPPA and Voice Recordings clarifying that if a child uses their voice as a substitute for typing (for example, to ask a question) in a way that does not reveal any personal information, such as their name, it would not be considered a violation of COPPA. However, getting parental consent before bringing a voice assistant into the classroom is still the safest option.

Third, plenty of technology experts have voiced concerns about the potential privacy risks these devices pose for consumers. Recent headlines include the story of a family whose private conversation was recorded and sent to a business associate. Judith Shulevitz reports on potential “dangers of allowing our most private information to be harvested, stored, and sold.” Still, it’s one thing if parents choose to purchase a voice assistant for home use, but educators are (and should be) held to a higher standard when it comes to protecting student privacy.

Despite these concerns, there are educators who will still pursue the use of voice assistants in the classroom. While I don’t agree with it, here are some things educators planning to use voice assistants in the classroom should first take into consideration:

- Check with your school or district before bringing a voice assistant into the classroom. Your school or district may already have policies regarding the classroom use of voice assistants, so do your research before bringing one into the classroom. Some schools and districts don’t allow these devices to be used by students due to the privacy, legal, and/or technology-related concerns they pose.

- Educate yourself first about state and federal student privacy laws. The Educator’s Guide to Student Data Privacy, from the Future of Privacy Forum and ConnectSafely, is a great place to get started. Student Privacy 101: FERPA for Parents and Students is a helpful video that explains FERPA in plain English. The Utah State Board of Education has an informative and entertaining YouTube playlist covering privacy basics for educators. Be sure to learn about your state laws covering student data privacy; they may be more specific and restrictive than federal law.

- Get parent permission before using a voice assistant in the classroom. Alexa and Google Home were not designed for educational purposes, so at a minimum you need to get parent permission before using them with students under 13. In this Future of Privacy Forum webinar, educator Kerry Gallagher describes the transparent, carefully considered process used by one educator to get student and parent input before bringing a Google Home into her classroom.

- Treat the voice assistant as if it were an outside classroom visitor. Don’t discuss confidential student information in front of the voice assistant; power off (or even unplug) the device first. Examples would include (but are not limited to) a conversation with a student, parent, or professional colleague about grades or behavior. Don’t use the voice assistant in ways that allow a recorded voice to be directly associated with an individual student.

- Manage device privacy settings carefully. Both Google Home and Amazon Alexa allow you to view and delete device recordings and manage device permissions. Do your research and limit those permissions to what you actually need. If using third-party applications with your voice assistant, review their privacy policies carefully. Delete voice recordings regularly.

- Consider how and whether this device will enhance teaching and learning. When introducing a new device to the classroom, consider the opportunity to discuss the choices we make when we introduce these particular technologies into our lives. Also consider the novel contributions a particular technology may make to your teaching. Why do you want to use a voice assistant in the classroom and how will you use it? How can the voice assistant promote deeper learning and critical thinking? How will you use the voice assistant to educate students (and parents) about issues like privacy and digital citizenship? Many of the proposed uses I’ve read about seem more focused on convenience and classroom management than meaningful pedagogy.

In the future, technology vendors may develop voice assistants designed for education that don’t pose the same privacy and legal risks as the consumer devices currently available. When that day arrives, I look forward to seeing how educators leverage them to improve student learning. Until then, however, educators should think twice before jumping on the voice assistant bandwagon.

Susan M. Bearden is an education technology consultant for the Future of Privacy Forum and the Chief Innovation Officer for the Consortium for School Networking. She was previously the Senior Education Pioneers Fellow at the U.S. Department of Education’s Office of Educational Technology in 2015-2016, and the Director of Information Technology at Holy Trinity Episcopal Academy in Melbourne, Florida.

Image via www.vpnsrus.com